The final version of my paper has finally appeared, published by Elsevier. This is my second paper in the Speech Communication journal (currently Q1, IF: 2.0, CiteScore: 4.8, h5-index: 28, Google top 20). Unlike my previous subscription-only paper, this one is open access. The link is below, followed by a short description of the paper.

https://doi.org/10.1016/j.specom.2022.03.002

Abstract

Speech emotion recognition (SER) is traditionally performed using merely acoustic information. Acoustic features, commonly extracted per frame, are mapped into emotion labels using classifiers such as support vector machines for machine learning or multi-layer perceptrons for deep learning. Previous research has shown that acoustic-only SER suffers from many issues, most notably low performance. However, speech carries not only acoustic information but also linguistic information. The linguistic features can be extracted from the text transcribed by an automatic speech recognition system. The fusion of acoustic and linguistic information could improve SER performance. This paper presents a survey of the works on bimodal emotion recognition fusing acoustic and linguistic information. Five components of bimodal SER are reviewed: emotion models, datasets, features, classifiers, and fusion methods. Some major findings, including state-of-the-art results and their methods on commonly used datasets, are also presented to give insights into current research and to help surpass these results. Finally, this survey identifies the remaining issues in bimodal SER research as future research directions.
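To make the idea of fusing acoustic and linguistic information a bit more concrete, here is a minimal sketch of two commonly used fusion strategies: feature-level (early) fusion and decision-level (late) fusion. It is only an illustration, not the method of any specific paper in the survey. The function names, the toy probabilities, and the `weight` parameter are all assumptions made for this example.

```python
from typing import Dict, List


def early_fusion(acoustic: List[float], linguistic: List[float]) -> List[float]:
    """Feature-level fusion: concatenate both feature vectors into one.

    The fused vector would then be fed to a single classifier (hypothetical here).
    """
    return list(acoustic) + list(linguistic)


def late_fusion(
    p_acoustic: Dict[str, float],
    p_linguistic: Dict[str, float],
    weight: float = 0.5,
) -> Dict[str, float]:
    """Decision-level fusion: weighted average of per-class probabilities.

    `weight` is the share given to the acoustic model (an assumed parameter).
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    labels = set(p_acoustic) | set(p_linguistic)
    return {
        label: weight * p_acoustic.get(label, 0.0)
        + (1.0 - weight) * p_linguistic.get(label, 0.0)
        for label in labels
    }


def predict(probabilities: Dict[str, float]) -> str:
    """Return the emotion label with the highest fused probability."""
    return max(probabilities, key=probabilities.get)


if __name__ == "__main__":
    # Toy outputs from two hypothetical unimodal classifiers.
    acoustic_probs = {"angry": 0.5, "happy": 0.3, "neutral": 0.2}
    linguistic_probs = {"angry": 0.1, "happy": 0.7, "neutral": 0.2}

    fused = late_fusion(acoustic_probs, linguistic_probs, weight=0.5)
    print(predict(fused))
    print(early_fusion([0.1, 0.2], [1.0]))
```

In this toy case the acoustic model alone would pick "angry", while the equally weighted fusion picks "happy", because the linguistic model is more confident. In practice the weight (or a learned fusion layer) would be tuned on validation data.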

Method

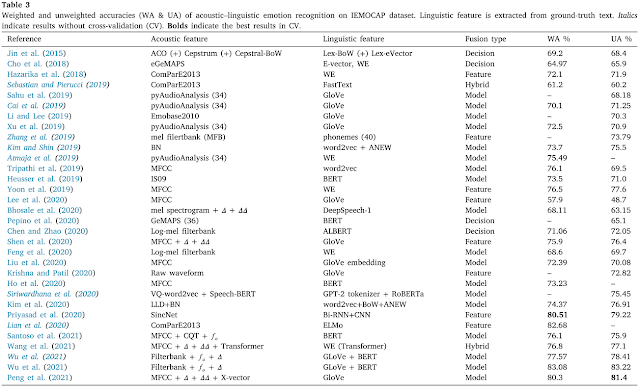

Result

Take-home message

- The paper lists several remaining challenges for bimodal SER research.

- Can we extract linguistic information without text? Yes, we can. Read here (this is actually covered in the challenges section of my review paper, and someone has already done it).

Citation

Atmaja, B. T., Sasou, A., & Akagi, M. (2022). Survey on bimodal speech emotion recognition from acoustic and linguistic information fusion. Speech Communication, 140, 11–28. https://doi.org/10.1016/j.specom.2022.03.002