I tried adding a recipe to Nkululeko for pathological voice detection using the Saarbruecken Voice Database (SVD; the dataset may currently be unavailable for download because of a server problem). Nkululeko is easy to use: I needed only two working days (with plenty of time spent on other things) to process the data and get an initial result. Performance was measured mainly with the macro-averaged F1 score. The initial result was an F1 score of 71%, obtained using openSMILE features ('os') and an SVM without any customization.

On the third day, I made some modifications while keeping the 'os' features. Reusing the same 'exp' directory meant the features did not have to be extracted again, which sped up the experiments. The best macro F1 score I achieved was 76%. This time, the modifications were: no feature scaling, balancing the training data with SMOTE, and using XGBoost ('xgb') as the classifier. My goal is to reach an F1 score of 80% before the end of the fiscal year (March 2025).
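To make the SMOTE balancing step more concrete, here is a minimal pure-Python sketch of the idea: each synthetic sample is placed at a random point on the line between a minority-class sample and one of its nearest minority-class neighbours. This is a simplified illustration, not the implementation Nkululeko or imbalanced-learn uses. The function name `smote_sketch`, the 2-D toy data, and `k=5` are assumptions made for the example. The class sizes are derived from the log further down (1842 training samples before balancing, 1224 per class afterwards), assuming the larger class is left unchanged.

```python
import math
import random


def smote_sketch(minority, n_new, k=5, seed=0):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours (simplified SMOTE)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # k nearest minority neighbours of x, excluding x itself
        neighbours = sorted(
            (p for p in minority if p is not x),
            key=lambda p: math.dist(x, p),
        )[:k]
        nb = rng.choice(neighbours)
        t = rng.random()  # position along the segment from x to nb
        synthetic.append(tuple(a + t * (b - a) for a, b in zip(x, nb)))
    return synthetic


# Sizes derived from the log: 1842 samples before, 1224 per class after.
n_train = 1842
n_balanced_per_class = 1224
n_minority = n_train - n_balanced_per_class  # size of the smaller class
n_new = n_balanced_per_class - n_minority    # samples SMOTE has to add

# Toy 2-D minority data (the real 'os' features have 88 dimensions).
data_rng = random.Random(42)
minority = [(data_rng.random(), data_rng.random()) for _ in range(n_minority)]

synthetic = smote_sketch(minority, n_new)
balanced_minority = minority + synthetic
print(len(minority), len(synthetic), len(balanced_minority))
```

Because every synthetic point lies between two existing minority samples, the balanced class stays within the region the original minority samples occupy.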

Here are the configuration (INI) file and a sample of the terminal output.

Configuration file:

[EXP]

root = /tmp/results/

name = exp_os

[DATA]

databases = ['train', 'dev', 'test']

train = ./data/svd/svd_a_train.csv

train.type = csv

train.absolute_path = True

train.split_strategy = train

train.audio_path = /data/SaarbrueckenVoiceDatabase/export_16k

dev = ./data/svd/svd_a_dev.csv

dev.type = csv

dev.absolute_path = True

dev.split_strategy = train

dev.audio_path = /data/SaarbrueckenVoiceDatabase/export_16k

test = ./data/svd/svd_a_test.csv

test.type = csv

test.absolute_path = True

test.split_strategy = test

test.audio_path = /data/SaarbrueckenVoiceDatabase/export_16k

target = label

; no_reuse = True

; labels = ['angry', 'calm', 'sad']

; get the number of classes from the target column automatically

[FEATS]

; type = ['wav2vec2']

; type = ['hubert-large-ll60k']

; type = []

type = ['os']

; scale = standard

balancing = smote

; no_reuse = True

[MODEL]

type = xgb
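As a quick sanity check, a configuration like the one above can be read with Python's standard `configparser`. The sketch below is not Nkululeko's own loading code. It embeds a trimmed copy of the file (only the keys it inspects) and shows how the `split_strategy` entries assign both `train` and `dev` to the training split, and that a commented-out key such as `scale` is simply absent.

```python
import ast
import configparser

# Trimmed copy of the configuration above (only the keys used below).
CONFIG_TEXT = """
[EXP]
root = /tmp/results/
name = exp_os
[DATA]
databases = ['train', 'dev', 'test']
train.split_strategy = train
dev.split_strategy = train
test.split_strategy = test
target = label
[FEATS]
type = ['os']
; scale = standard
balancing = smote
[MODEL]
type = xgb
"""

config = configparser.ConfigParser()
config.read_string(CONFIG_TEXT)

# List-valued entries are written as Python literals, so parse them safely.
databases = ast.literal_eval(config["DATA"]["databases"])
features = ast.literal_eval(config["FEATS"]["type"])

# Group the databases by the split they are assigned to.
splits = {}
for db in databases:
    strategy = config["DATA"][f"{db}.split_strategy"]
    splits.setdefault(strategy, []).append(db)

# A commented-out key (here: scale) is not part of the parsed section.
scale = config["FEATS"].get("scale", fallback=None)

print(splits)
print(features, scale, config["FEATS"]["balancing"], config["MODEL"]["type"])
```

This grouping is consistent with the log below, where the training set has 1842 samples (1650 from `train` plus 192 from `dev`).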

Outputs:

$ python3 -m nkululeko.nkululeko --config exp_svd/exp_os.ini

DEBUG: nkululeko: running exp_os from config exp_svd/exp_os.ini, nkululeko version 0.88.12

DEBUG: dataset: loading train

DEBUG: dataset: Loaded database train with 1650 samples: got targets: True, got speakers: False (0), got sexes: False, got age: False

DEBUG: dataset: converting to segmented index, this might take a while...

DEBUG: dataset: loading dev

DEBUG: dataset: Loaded database dev with 192 samples: got targets: True, got speakers: False (0), got sexes: False, got age: False

DEBUG: dataset: converting to segmented index, this might take a while...

DEBUG: dataset: loading test

DEBUG: dataset: Loaded database test with 190 samples: got targets: True, got speakers: False (0), got sexes: False, got age: False

DEBUG: dataset: converting to segmented index, this might take a while...

DEBUG: experiment: target: label

DEBUG: experiment: Labels (from database): ['n', 'p']

DEBUG: experiment: loaded databases train,dev,test

DEBUG: experiment: reusing previously stored /tmp/results/exp_os/./store/testdf.csv and /tmp/results/exp_os/./store/traindf.csv

DEBUG: experiment: value for type is not found, using default: dummy

DEBUG: experiment: Categories test (nd.array): ['n' 'p']

DEBUG: experiment: Categories train (nd.array): ['n' 'p']

DEBUG: nkululeko: train shape : (1842, 3), test shape:(190, 3)

DEBUG: featureset: value for n_jobs is not found, using default: 8

DEBUG: featureset: reusing extracted OS features: /tmp/results/exp_os/./store/train_dev_test_os_train.pkl.

DEBUG: featureset: value for n_jobs is not found, using default: 8

DEBUG: featureset: reusing extracted OS features: /tmp/results/exp_os/./store/train_dev_test_os_test.pkl.

DEBUG: experiment: All features: train shape : (1842, 88), test shape:(190, 88)

DEBUG: experiment: scaler: False

DEBUG: runmanager: value for runs is not found, using default: 1

DEBUG: runmanager: run 0 using model xgb

DEBUG: modelrunner: balancing the training features with: smote

DEBUG: modelrunner: balanced with: smote, new size: 2448 (was 1842)

DEBUG: modelrunner: {'n': 1224, 'p': 1224})

DEBUG: model: value for n_jobs is not found, using default: 8

DEBUG: modelrunner: value for epochs is not found, using default: 1

DEBUG: modelrunner: run: 0 epoch: 0: result: test: 0.771 UAR

DEBUG: modelrunner: plotting confusion matrix to train_dev_test_label_xgb_os_balancing-smote_0_000_cnf

DEBUG: reporter: Saved confusion plot to /tmp/results/exp_os/./images/run_0/train_dev_test_label_xgb_os_balancing-smote_0_000_cnf.png

DEBUG: reporter: Best score at epoch: 0, UAR: .77, (+-.704/.828), ACC: .773

DEBUG: reporter:

precision recall f1-score support

n 0.65 0.76 0.70 67

p 0.86 0.78 0.82 123

accuracy 0.77 190

macro avg 0.76 0.77 0.76 190

weighted avg 0.79 0.77 0.78 190

DEBUG: reporter: labels: ['n', 'p']

DEBUG: reporter: Saved ROC curve to /tmp/results/exp_os/./results/run_0/train_dev_test_label_xgb_os_balancing-smote_0_roc.png

DEBUG: reporter: auc: 0.771, pauc: 0.560 from epoch: 0

DEBUG: reporter: result per class (F1 score): [0.703, 0.817] from epoch: 0

DEBUG: experiment: Done, used 11.065 seconds

DONE
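The summary rows of the classification report can be reproduced from the per-class rows. The following sketch recomputes the macro F1, weighted F1, and UAR (unweighted average recall, i.e. macro recall) from the rounded precision, recall, and support values printed above. Because the inputs are already rounded to two decimals, the results only match the report approximately.

```python
# Per-class values copied from the classification report above:
# label -> (precision, recall, support)
report = {"n": (0.65, 0.76, 67), "p": (0.86, 0.78, 123)}


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


per_class_f1 = {label: f1(p, r) for label, (p, r, _) in report.items()}
total_support = sum(support for _, _, support in report.values())

# Macro average: every class counts equally.
macro_f1 = sum(per_class_f1.values()) / len(per_class_f1)

# Weighted average: every class counts in proportion to its support.
weighted_f1 = (
    sum(per_class_f1[label] * report[label][2] for label in report) / total_support
)

# UAR: plain mean of the per-class recalls.
uar = sum(recall for _, recall, _ in report.values()) / len(report)

print(f"macro F1: {macro_f1:.2f}, weighted F1: {weighted_f1:.2f}, UAR: {uar:.2f}")
```

The macro average is the relevant number here because the test set is imbalanced (67 'n' vs. 123 'p' samples): the weighted average is pulled towards the better-scoring, larger class.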

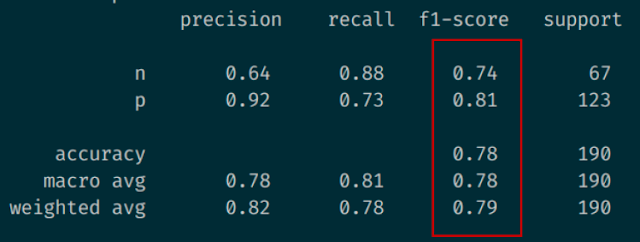

Update 2024/08/30:

- Using Praat features with XGB (no scaling, SMOTE balancing) achieves a higher F1 score: 78% (macro) and 79% (weighted).