This article documents my steps to extract acoustic features with the "emobase" configuration of opensmile-python under Windows. I used WSL (Windows Subsystem for Linux) with the latest Ubuntu release (20.04 at the time of writing).

0. Windows Version

Here is the Windows version I ran these experiments on. Other versions may give errors. To show your version, press the Windows key and type "about PC".

| Field | Value |
|---|---|
| Edition | Windows 10 Pro |
| Version | 20H2 |
| Installed on | 4/2/2021 |
| OS build | 19042.1083 |
| Experience | Windows Feature Experience Pack 120.2212.3530.0 |

1. Activate WSL2

Here are the steps to activate WSL2 on Windows 10. WSL2 only works on Windows 10 version 1903 or higher, with build 18362 or higher. On older builds, you can use WSL (version 1) instead of WSL2.
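If you want to check the build requirement from a Python interpreter installed on the Windows side, a small sketch like the one below can help. It assumes that `platform.version()` returns a string of the form `"10.0.<build>"`, as it does on Windows 10; `supports_wsl2` is a hypothetical helper name of my own, not part of any library.

```python
import platform

MIN_WSL2_BUILD = 18362  # minimum Windows 10 build for WSL2 (version 1903)


def supports_wsl2(version_string: str) -> bool:
    """Hypothetical helper: True if a version string like '10.0.19042' allows WSL2.

    Assumes the build number is the third dot-separated field.
    """
    parts = version_string.split(".")
    if len(parts) < 3 or not parts[0].isdigit() or not parts[2].isdigit():
        raise ValueError(f"unexpected version string: {version_string!r}")
    major = int(parts[0])
    build = int(parts[2])
    return major >= 10 and build >= MIN_WSL2_BUILD


# Only meaningful when run with a Windows Python interpreter
if platform.system() == "Windows":
    print("WSL2 supported:", supports_wsl2(platform.version()))
```

For example, the OS build listed above (19042) passes the check, while a build such as 17763 does not.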

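a. Enable WSL using PowerShell. Press the Windows key, type "PowerShell", choose "Run as administrator", and enter the following.

```powershell
dism.exe /online /enable-feature /featurename:Microsoft-Windows-Subsystem-Linux /all /norestart
```

WSL2 also needs the Virtual Machine Platform feature: run the same command with `/featurename:VirtualMachinePlatform`, then restart Windows.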

b. Install the Linux kernel update package. Download it from here:

https://wslstorestorage.blob.core.windows.net/wslblob/wsl_update_x64.msi

Double-click the downloaded .msi package to install it.

Then set WSL2 as the default version (in PowerShell):

```powershell
wsl --set-default-version 2
```

You will check the WSL version of the Ubuntu distribution after installing it below.

2. Install Ubuntu

Press the Windows key, type "Microsoft Store", and search for Ubuntu. I chose "Ubuntu" (the latest release) instead of "Ubuntu 20.04" or other versions.

See the image below; it is already installed on my machine.

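Ensure that Ubuntu uses WSL2. Check in PowerShell with the following command:

```powershell
wsl -l -v
```

The VERSION column should show 2 for Ubuntu. If it shows 1, convert it with `wsl --set-version Ubuntu 2`.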
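If you prefer to script this check, the sketch below parses the `wsl -l -v` table into a dictionary. It assumes the usual three-column layout (NAME, STATE, VERSION) with the default distribution marked by `*`, and that `wsl.exe` writes UTF-16LE when its output is piped, which is what I would expect on Windows 10; adjust the decoding if the text looks garbled. Both function names are hypothetical helpers, not part of any library.

```python
import subprocess


def parse_wsl_list(output: str) -> dict:
    """Hypothetical helper: parse `wsl -l -v` text into {distro_name: wsl_version}."""
    versions = {}
    lines = [ln for ln in output.replace("\ufeff", "").splitlines() if ln.strip()]
    for line in lines[1:]:  # skip the NAME / STATE / VERSION header
        parts = line.strip().lstrip("*").split()  # drop the default-distro marker
        if len(parts) >= 3 and parts[-1].isdigit():
            versions[parts[0]] = int(parts[-1])
    return versions


def wsl_versions() -> dict:
    """Hypothetical helper: run `wsl -l -v` (Windows only) and parse its output.

    Assumption: wsl.exe emits UTF-16LE on a pipe.
    """
    raw = subprocess.run(["wsl", "-l", "-v"], capture_output=True, check=True).stdout
    return parse_wsl_list(raw.decode("utf-16-le"))
```

On Windows, `wsl_versions().get("Ubuntu") == 2` then tells you whether Ubuntu is running under WSL2.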

Then click "Launch" in the Microsoft Store (previous image/step), or type "Ubuntu" in the Windows search bar.

When you launch Ubuntu for the first time, you will be prompted for a user name and password. Remember these credentials; sudo will ask for the password later. See the image below for an example.

3. Install Python and pip

In the Ubuntu terminal, type:

```bash
sudo apt update && sudo apt -y upgrade
```

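Enter your password, and type "y" if prompted.

Install Python using apt. I chose Python 3.7:

```bash
sudo apt install python3.7-full
```

Depending on your Ubuntu release, python3.7 may not be in the default repositories; in that case it is commonly installed from the deadsnakes PPA.

Type "y" when prompted. See the image below for reference.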

To test the installation, type "python3.7" in Ubuntu to open the Python 3.7 console (leave it with `exit()`).

Next, we need pip to install Python packages, so install it first:

```bash
python3.7 -m ensurepip --upgrade
```

4. Install Python-Opensmile

With pip now available for this Python version, we can use it directly to install opensmile:

```bash
python3.7 -m pip install opensmile
```

See the image below for reference.

I also installed IPython for convenience, together with numpy, scipy, and audb (audb is used below to download the emodb dataset). You may also want matplotlib for plotting.

```bash
python3.7 -m pip install ipython numpy scipy audb
```

We also need to install sox, since opensmile requires it:

```bash
sudo apt install sox
```
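Before moving on, a quick sanity check can confirm that everything installed above is visible to the interpreter you are using. This is a minimal sketch with only the standard library: the module list mirrors the pip command above plus audiofile (imported in Example 1, normally installed as a dependency), and `missing_modules` and `sox_available` are hypothetical helper names. Run it with `python3.7`.

```python
import importlib.util
import shutil

# Modules from the pip install above, plus audiofile used in Example 1
REQUIRED_MODULES = ["opensmile", "audb", "audiofile", "numpy", "scipy", "IPython"]


def missing_modules(names):
    """Hypothetical helper: return the top-level modules this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def sox_available() -> bool:
    """Hypothetical helper: True if the `sox` executable is on PATH."""
    return shutil.which("sox") is not None


if __name__ == "__main__":
    print("missing Python modules:", missing_modules(REQUIRED_MODULES) or "none")
    print("sox found:", sox_available())
```

An empty "missing" list and `sox found: True` mean the environment matches the steps above.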

5. Extract Emobase Feature

Now it is time to use opensmile. First, open an IPython console for Python 3.7:

```bash
python3.7 -m IPython
```

Import opensmile and download the emodb dataset with a specific configuration.

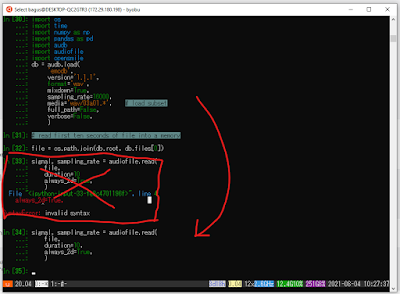

See the image below for reference. Skip the parts marked with a red cross, since they contain errors (I forgot to add a comma between arguments).
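If you only want to try `process_signal` on a local WAV file instead of emodb, the sketch below produces the same kind of `signal, sampling_rate` pair: a float32 array of shape (channels, samples), similar to what `audiofile.read(..., always_2d=True)` returns. It uses only the standard `wave` module and numpy, assumes 16-bit PCM input, and `read_wav_2d` is a hypothetical helper name.

```python
import wave

import numpy as np


def read_wav_2d(path):
    """Hypothetical helper: read a 16-bit PCM WAV file into (signal, sampling_rate).

    signal has shape (channels, samples) with float32 values in [-1, 1).
    """
    with wave.open(path, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = wf.getnchannels()
        sampling_rate = wf.getframerate()
        frames = wf.readframes(wf.getnframes())
    # little-endian int16 samples scaled to [-1, 1)
    samples = np.frombuffer(frames, dtype="<i2").astype(np.float32) / 32768.0
    # WAV frames are interleaved [L0, R0, L1, R1, ...] -> reshape to (channels, samples)
    signal = samples.reshape(-1, channels).T
    return signal, sampling_rate
```

With it, `signal, sampling_rate = read_wav_2d("my_file.wav")` (a placeholder file name) followed by `smile.process_signal(signal, sampling_rate)` mirrors the emodb workflow.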

Configure opensmile to extract the emobase feature set:

```python
smile = opensmile.Smile(
    feature_set=opensmile.FeatureSet.emobase,
    feature_level=opensmile.FeatureLevel.Functionals,
)
smile.feature_names
```

See the image below for reference. You can change the feature_level value to `opensmile.FeatureLevel.LowLevelDescriptors` if you want LLDs (low-level descriptors, extracted per frame) instead of functionals (statistics computed over the LLDs). The emobase functional set has 988 features (check with `len(smile.feature_names)`).
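To make the LLD/functionals distinction concrete, here is a toy numpy sketch: it computes one LLD (frame-wise RMS energy) and then a few functionals over that contour. The frame length, hop size, and chosen statistics are my own assumptions for illustration; emobase uses its own descriptors, frame settings, and a much larger set of functionals. `frame_rms` and `functionals` are hypothetical helper names.

```python
import numpy as np


def frame_rms(signal, frame_len=400, hop=160):
    """Hypothetical LLD: RMS energy per frame (25 ms frames, 10 ms hop at 16 kHz)."""
    n_frames = 1 + max(0, (len(signal) - frame_len) // hop)
    frames = np.stack([signal[i * hop : i * hop + frame_len] for i in range(n_frames)])
    return np.sqrt(np.mean(frames ** 2, axis=1))


def functionals(lld):
    """Hypothetical functionals: summary statistics of one LLD contour over all frames."""
    return {
        "mean": float(np.mean(lld)),
        "stddev": float(np.std(lld)),
        "min": float(np.min(lld)),
        "max": float(np.max(lld)),
        "range": float(np.max(lld) - np.min(lld)),
    }


# 1 s of a constant signal at 16 kHz: 98 frames of LLDs collapse into one row of statistics
lld = frame_rms(np.ones(16000, dtype=np.float32))
stats = functionals(lld)
```

The LLD output has one value per frame, while the functionals reduce the whole file to a single row, which is why `FeatureLevel.Functionals` gives one row per file.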

Finally, extract the acoustic features with this configuration:

```python
smile.process_signal(
    signal,
    sampling_rate,
)
```

See the image below for reference.

That's all. I usually save the extracted acoustic features in another format, such as numpy .npy files or .csv files. This is my first time extracting the emobase feature set; previously I used the gemaps, egemaps, compare2016, and emo_large configurations. Let's see whether this feature set has advantages over the others.
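For saving, here is a minimal sketch that writes a feature matrix (one row per utterance) both as .npy and as .csv with the feature names as a header line. The random matrix and the names `feat_0`, `feat_1`, ... are placeholders; in practice you would use the stacked `process_signal` outputs and `smile.feature_names`. `save_features` is a hypothetical helper name.

```python
import os
import tempfile

import numpy as np


def save_features(feats, names, stem):
    """Hypothetical helper: save an (n_utterances, n_features) matrix as <stem>.npy and <stem>.csv."""
    feats = np.asarray(feats, dtype=np.float64)
    if feats.ndim != 2 or feats.shape[1] != len(names):
        raise ValueError("expected shape (n_utterances, len(names))")
    np.save(stem + ".npy", feats)
    # comments="" stops numpy from prefixing the header line with "# "
    np.savetxt(stem + ".csv", feats, delimiter=",", header=",".join(names), comments="")


out_dir = tempfile.mkdtemp()
stem = os.path.join(out_dir, "features")
names = [f"feat_{i}" for i in range(4)]                # placeholder feature names
feats = np.random.default_rng(0).normal(size=(3, 4))   # placeholder: 3 utterances
save_features(feats, names, stem)

# Read both files back: .npy keeps the exact array, the CSV needs its header skipped
loaded_npy = np.load(stem + ".npy")
loaded_csv = np.loadtxt(stem + ".csv", delimiter=",", skiprows=1)
```

The .npy file is compact and exact; the CSV with a header is easier to inspect or load into other tools.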

Although these steps target Windows 10, the same opensmile setup may also work on other Linux distributions. Still, I prefer native Ubuntu because the process is simpler and more straightforward: there is no need to set up WSL2 and the rest, just install the packages with pip.

The full script to extract emobase functional features from all utterances in the emodb dataset is given below. Note that it takes a long time to run, since it downloads all emodb utterances in audb format and then extracts acoustic features from each of them.

Example 1: Extract emobase features from the emodb dataset and save them as an .npy file.

```python
import os

import numpy as np
import pandas as pd  # only needed for the re-run variant below

import audb
import audiofile
import opensmile

sr = 16000

# audb caches the dataset; changing these arguments downloads it again
db = audb.load(
    'emodb',
    version='1.1.1',
    format='wav',
    mixdown=True,
    sampling_rate=sr,
    full_path=False,
    verbose=True,
)

smile = opensmile.Smile(
    feature_set=opensmile.FeatureSet.emobase,
    feature_level=opensmile.FeatureLevel.Functionals,
)

# If you run this program a second time, you can comment out the audb.load()
# call above, uncomment the two lines below, and use db_root and db_files
# in place of db.root and db.files in the loop.
# db_root = audb.cached().index[0]
# db_files = pd.read_csv('/home/bagus/audb/emodb/1.1.1/fe182b91/db.files.csv')['file']

feats = []
for f in db.files:  # db.files holds paths relative to db.root
    file = os.path.join(db.root, f)
    signal, _ = audiofile.read(file, always_2d=True)
    feat = smile.process_signal(signal, sr)
    # one row of functionals per file -> flatten to a 1-D vector
    feats.append(feat.to_numpy().reshape(-1))

# save all emodb emobase features in a single .npy file (one row per utterance)
os.makedirs('data', exist_ok=True)
np.save('data/emodb_emobase.npy', np.stack(feats))
```

Example 2: Extract emobase features from the files in a directory ("ang") and save them in a CSV file.

```python
import glob
import os

import numpy as np
import opensmile
# from scipy.io import wavfile

# JTES angry utterances of speaker f01, 50 files
data_path = "/data/jtes_v1.1/wav/f01/ang/"
files = sorted(glob.glob(os.path.join(data_path, "*.wav")))

# initialize opensmile with the emobase feature set
smile = opensmile.Smile(
    feature_set=opensmile.FeatureSet.emobase,
    feature_level=opensmile.FeatureLevel.Functionals,
)
smile.feature_names

# read the wav files and extract emobase features from each file
feat = []
for file in files:
    print("processing file ... ", file)
    # sr, data = wavfile.read(file)
    # feat_i = smile.process_signal(data, sr)
    feat_i = smile.process_file(file)
    feat.append(feat_i.to_numpy().flatten())

# save features as a csv file, one row per file, comma-separated
np.savetxt("jtes_f01_ang.csv", feat, delimiter=",")
```

If you run into problems while following this article, let me know in the comments below.
